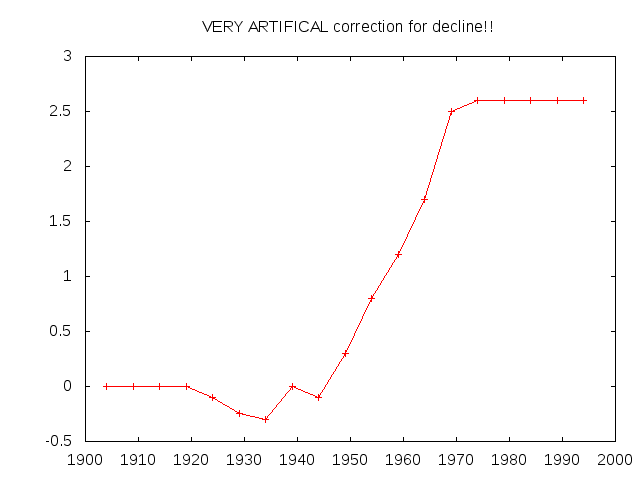

From the CRU code file osborn-tree6/briffa_sep98_d.pro , used to prepare a graph purported to be of Northern Hemisphere temperatures and reconstructions.

;

; Apply a VERY ARTIFICAL correction for decline!!

;

yrloc=[1400,findgen(19)*5.+1904]

valadj=[0.,0.,0.,0.,0.,-0.1,-0.25,-0.3,0.,-0.1,0.3,0.8,1.2,1.7,2.5,2.6,2.6,$

2.6,2.6,2.6]*0.75 ; fudge factor

if n_elements(yrloc) ne n_elements(valadj) then message,'Oooops!'

;

yearlyadj=interpol(valadj,yrloc,timey)

This, people, is blatant data-cooking, with no pretense otherwise. It flattens a period of warm temperatures in the 1930s (see those negative coefficients?). Then, later on, it applies a positive multiplier so you get a nice dramatic hockey stick at the end of the century.
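To see which years those coefficients land on, here is a minimal Python sketch that re-creates the two arrays from the snippet above. It is a translation for illustration, not CRU's code; the names `raw`, `negative_years` and `positive_years` are my own. It relies on `findgen(19)` producing 0.0 through 18.0, so the knots after the 1400 anchor run from 1904 to 1994 in five-year steps.

```python
# Python re-creation of the two IDL arrays quoted above (illustrative only).
# findgen(19) in IDL yields 0.0 .. 18.0, so the knots run 1904 .. 1994.
yrloc = [1400.0] + [1904.0 + 5.0 * i for i in range(19)]
raw = [0., 0., 0., 0., 0., -0.1, -0.25, -0.3, 0., -0.1, 0.3, 0.8, 1.2, 1.7,
       2.5, 2.6, 2.6, 2.6, 2.6, 2.6]
valadj = [v * 0.75 for v in raw]  # the "fudge factor" scaling

# Same sanity check as the IDL n_elements test.
assert len(yrloc) == len(valadj), "Oooops!"

# Which knot years carry a negative or a positive adjustment?
negative_years = [int(y) for y, v in zip(yrloc, valadj) if v < 0]
positive_years = [int(y) for y, v in zip(yrloc, valadj) if v > 0]

for year, adj in zip(yrloc, valadj):
    print(f"{int(year):5d} {adj:+.4f}")
```

Printing `negative_years` and `positive_years` after running this shows where the sign of the adjustment flips.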

All you apologists weakly protesting that this is research business as usual and there are plausible explanations for everything in the emails? Sackcloth and ashes time for you. This isn’t just a smoking gun, it’s a siege cannon with the barrel still hot.

UPDATE2: Now the data is 0.75 scaled. I think I interpreted the yrloc entry incorrectly last time, introducing an off-by-one. The 1400 point (same as the 1904) is omitted as it confuses gnuplot. These are details; the basic hockey-stick shape is unaltered.

UPDATE3: Graphic is temporarily unavailable due to a server glitch. I’m contacting the site admins about this.

From the “I hate to be that guy” dept., a minor typo correction: Sackloth → Sackcloth

How did you find this? Just grepped for ‘fudge factor’?

Are you referring to the black curve on this temperature reconstructions graph? The primary sources are on the image description page. The black curve (CRU’s data) does seem to be cooler in the 40’s and hotter in the 2000’s than the other data sets…

OMG.

oops… the black curve is the instrument record, not a reconstruction… The blue curve might be the culprit, because it’s the one by Briffa published in 1998, but of course I’m just speculating.

>How did you find this? Just grepped for ‘fudge factor’?

There was a brief note about it in a comment on someone else’s blog, enough to clue me that I should grep -r for ARTIFICAL. I dusted off my Fortran and read the file. Whoever wrote the note had caught the significance of the negative coefficients but, oddly, didn’t notice (or didn’t mention) the much more blatant J-shaping near the end of the series.

You know, I always knew all this was bs. Not because I had any data: Sometimes I can just smell the bs in the air.

Good heuristic: any time you hear these kind of hysterical claims that make it sound like the world is coming to an end, they are lying.

This whole thing is a scam to control our lives.

Yeah, “Nothing unethical about a ‘trick'”, they say. How they consider themselves even remotely ethical (or credible) after circumventing FOIA requests and this sort of fudgery is beyond me.

Thankfully, this is finally getting some press.

Oh, yeah, account for the fact that some of them can actually spell. Grep for ARTIFICIAL also :)
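For anyone without grep handy, the search for both spellings can be sketched in Python. This is only an illustration of the idea: the function name `grep_tree` is made up here, and the pattern simply makes the second "I" optional so that ARTIFICAL and ARTIFICIAL both match, in any letter case.

```python
import os
import re

# Optional second "I" catches both the misspelling and the correct spelling.
PATTERN = re.compile(r"ARTIFICI?AL", re.IGNORECASE)


def grep_tree(root):
    """Yield (path, line_number, line) for each matching line under root."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            try:
                # latin-1 never fails to decode, which suits old source files.
                with open(path, encoding="latin-1") as handle:
                    for number, line in enumerate(handle, start=1):
                        if PATTERN.search(line):
                            yield path, number, line.rstrip("\n")
            except OSError:
                continue  # unreadable file: skip it quietly
```

Calling `list(grep_tree("some/directory"))` on an unpacked copy of the archive would list every hit with its file and line number; the directory name is a placeholder.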

New post up on Data & Demogogues that covers a different aspect of this fracas. The skeptic’s side has its own instances of idiocy going on.

I’ve been trying to puzzle out for myself what this code is actually trying to do. My IDL-fu is effectively non-existent however…

If you expand ESR’s original quote a little bit you get…

plot,timey,comptemp(*,3),/nodata,$

/xstyle,xrange=[1881,1994],xtitle='Year',$

/ystyle,yrange=[-3,3],ytitle='Normalised anomalies',$

; title='Northern Hemisphere temperatures, MXD and corrected MXD'

title='Northern Hemisphere temperatures and MXD reconstruction'

;

yyy=reform(comptemp(*,2))

;mknormal,yyy,timey,refperiod=[1881,1940]

filter_cru,5.,/nan,tsin=yyy,tslow=tslow

oplot,timey,tslow,thick=5,color=22

yyy=reform(compmxd(*,2,1))

;mknormal,yyy,timey,refperiod=[1881,1940]

;

; Apply a VERY ARTIFICAL correction for decline!!

;

yrloc=[1400,findgen(19)*5.+1904]

valadj=[0.,0.,0.,0.,0.,-0.1,-0.25,-0.3,0.,-0.1,0.3,0.8,1.2,1.7,2.5,2.6,2.6,$

2.6,2.6,2.6]*0.75 ; fudge factor

if n_elements(yrloc) ne n_elements(valadj) then message,'Oooops!'

;

yearlyadj=interpol(valadj,yrloc,timey)

;

;filter_cru,5.,/nan,tsin=yyy+yearlyadj,tslow=tslow

;oplot,timey,tslow,thick=5,color=20

;

filter_cru,5.,/nan,tsin=yyy,tslow=tslow

oplot,timey,tslow,thick=5,color=21

I read this as being responsible for plotting the ‘Northern Hemisphere temperatures and MXD reconstruction’.

Note however the commented out code. The way i’m reading this, any graph titled ‘Northern Hemisphere temperatures, MXD and corrected MXD’ with a thick red line released before the brown matter hit the whirly thing probably has cooked data. Likewise if you see a thick blue line you might be ok.

Where do i get thick red line from?

According to the IDL Reference Guide for IDL v5.4, thick=5 means 5 times normal thickness. and color=21 links to (i believe)

def_1color,20,color='red'

def_1color,21,color='blue'

def_1color,22,color='black'

from just above the code segment itself.

However there’s one thing that i’m not sure about without either being able to play with IDL or seeing the end graph. It does actually plot the uncooked data in black (oplot,timey,tslow,thick=5,color=22) so what’s the point of showing a cooked data line along with the uncooked dataline? Not to mention that the uncommented version apparently prints the same data series twice.

bah… and if i’d just looked again at the lower plot call, what it was doing might’ve made sense.

The red (cooked) line is labelled as “Northern Hemisphere MXD corrected for decline”. So if the line labelling matches the comment i’d be worried how much of a smoking gun it is.

Decline in instrument accuracy perhaps?

>Decline in instrument accuracy perhaps?

Reminder: Here’s Phil Jones writing to Ray Bradley and friends: “I’ve just completed Mike’s Nature trick of adding in the real temps to each series for the last 20 years (ie from 1981 onwards) amd from 1961 for Keith’s to hide the decline”

The output of this program may have been their check to see if a visualization of the cooked data wouldn’t look obviously bogus before they shopped it to the politicians and funding sources. That’s the only way I can think of to explain plotting both crocked and uncrocked datasets in the same visualization.

I wonder what the odds are that Ian Harris gets thrown under the CRU bus: “We had NO idea the data was so awful…he should have consulted with us…” etc.

>I wonder what the odds are that Ian Harris gets thrown under the CRU bus:

Dangerous move. If he got backed into a corner, he might decide to rat out the cabal.

I had already had the thought that, if this was an inside job, Harris is the most plausible candidate for being the leaker.

@Darrencardinal: my personal heuristic is that if the solution to a proposed problem is the rollback of western civilization, the problem probably doesn’t exist.

>if the solution to a proposed problem is the rollback of western civilization, the problem probably doesn’t exist.

I have a closely related heuristic: any eco-related scare for which the prescription would result in a massive transfer of power to the political class is bogus.

>Dangerous move. If he got backed into a corner, he might decide to rat out the cabal.

Sure, but at that point it can be painted as a he said/she said situation, which would provide some cover that the MSM could utilize. Also, if he’s a true believer, which seems possible, he might just take the bullet.

> I had already had the thought that, if this was an inside job, Harris is the most plausible candidate for being the leaker.

What do we know about him, besides what little the CRU site says? According to http://www.cru.uea.ac.uk/cru/about/history/people.htm he’s been at the CRU since 1996. He does have his name on 3 papers from the CRU: (http://www.cru.uea.ac.uk/cru/pubs/byauthor/harris_ic.htm) concentrating in tree-ring interpretation, which matches his job role descriptions.

@JonB: there is a GNU version of IDL, see http://gnudatalanguage.sourceforge.net/. I am compiling it right now under OpenSuse 11.1. If it produces any results I don’t know yet…

Pjotr

> I have a closely related heuristic: any eco-related scare for which the

> prescription would result in a massive transfer of power to the political

> class is bogus.

I would have thought that the ‘eco-related’ qualifier is a bit unnecessary there. You could apply that heuristic to almost every topic on your blog (admittedly the forge-scraper/data jailing link is a bit of a stretch).

I’ve always preferred the term “finagle factor”, but to each his own. I had an instructor in college who often employed the “eraser factor”.

Isn’t that just wishful thinking, in that you’re begging the question of whether or not critical and extraordinarily costly threats to civilization can exist in the first place?

Wait just a second. Explain this to me like I’m 12. They didn’t even bother to fudge the data? They hard-coded a hockey stick carrier right into the program?!!

ESR says: Yes. Yes, that’s exactly what they did.

There’s way more – the archive is a target rich environment. It’s clear from a short reading that this has never been QA’ed at all – no design or code reviews, and no testing. It’s a hack, in the worst sense of the word.

With trillions of dollars riding on it. No wonder they resisted the FOIA request.

There’s good reason to suspect the data, as well as the code. Even ignoring urban heat island effects, it looks like “adjustments” made to the raw data may account for most (or possibly even all) of the 20th century’s warming. CRU conveniently “lost” the raw data – seems they didn’t have enough disk space, and nobody knew how to spin a backup tape. Or something.

Edmund Burke—

If an idiot were to tell the same story every day for a year, we would in the end believe him. Then we will defend our error as if it were our inheritance.

Wouldn’t we have to know that this code was actually used for something, say a published paper, before it could be considered a smoking gun? Without that, it seems that the most you can say is that someone was possibly contemplating releasing distorted results.

My question is how do you tie the code to actual published results?

You may know that realclimate.org has comments on what appears to be this specific issue: you’re talking about a “VERY ARTIFICIAL correction” in “osborn-tree6/briffa_sep98_d.pro”, they mention at http://www.realclimate.org/?comments_popup=2019#comment-144081 a “briffa_sep98_e APPLIES A VERY ARTIFICIAL CORRECTION FOR DECLINE osborn-tree4” with realclimate.org’s usual bracketed response:

[Response: All this is related to a single kind of proxy – maximum

latewood density (MXD) whose problems have been discussed in the literature

since 1998. If you want more variety in proxies, go to the NOAA Paleoclimate

pages and start playing around. They have just set up a homogenous

set of proxies that anyone can use to do reconstrucitons in any way

they like. Knock yourself out. – gavin]

If I understand correctly, he’s saying that what you found is indeed a VERY ARTIFICIAL CORRECTION on one of the ways that some trees can be used as “proxy thermometers”, one which has been openly discussed for a decade, where nobody understands why some tree data doesn’t match other tree data or the actual thermometer data where that’s available. This could be a “siege cannon with the barrel still hot” fact about conspiracies, or it could be an utterly unimportant fact about certain kinds of trees; it depends on how the correction was used. “Gavin” (that’s http://en.wikipedia.org/wiki/Gavin_Schmidt) says that all his code and data are open; as a programmer who made fun of climate models back in the 80s (I was an asst prof of computer glop at UDel at the time) but hasn’t done so lately, I’d be most interested to see interactions between the two of you.

They didn’t just cook the data; they marinated it for a week, put on a rub, laid it in the smoker for a day and a half, sliced it up, wrapped it in bacon, dipped it in batter, rolled it around in flour, and deep fried it.

They’re Brits. That still doesn’t compare with American barbecue!

This is not some random crack from the outside. This is almost certainly an inside leak. 61 Mb is nothing. The probability that any 61 Mb of data, pulled off a file or email server, containing this much salient and inculpatory information is virtually nil. This data was selected by someone who knew what they were doing, what to look for and where to find it.

“The whole aim of practical politics is to keep the populace alarmed (and hence clamorous to be led to safety) by menacing it with an endless series of hobgoblins, all of them imaginary.” – H.L. Mencken

It also matches the graph labels and the comments. MXD refers to maximum latewood density, which is a particular factor in studying tree growth rings. It seems this particular program is plotting a comparison between MXD reconstructed temperatures and actual recorded temperatures.

Also, @esr

That code isn’t any Fortran dialect I’m familiar with. I think JonB said something about IDL about, and it does, in fact, look vaguely OMG IDLish.

>That code isn’t any Fortran dialect I’m familiar with. I think JonB said something about IDL about, and it does, in fact, look vaguely OMG IDLish.

You’re right, but at first glance it looked just enough like Fortran 77 that I assumed it must be some odd variant of same. Doesn’t matter; whatever it is, it’s quite readable.

And, in fact, it appears I wasn’t even entirely wrong. Reading the IDL docs, it looks like the language was designed to be least surprising to scientific Fortran programmers.

I take that back about OMG IDL…. OMG IDL uses “//” for comments, so that’s not it either. I think it may be this IDL, which is a scientific graphics package.

One of my metrics:

If a paper is submitted by a scientist who’s listed as the “Chief Scientist” of a Washington DC advocacy group, advocacy comes before science in their priority list. This applies to both sides of the divide.

Would it be possible for you to rerun this using constant data as an input and graph it? That is, input a straight line and see what the output is? I think that this would provide a good way for people to get a handle on what’s going on. If the variance is small, then perhaps it is a legitimate correction for some abnormality (ignoring that we don’t know what it is and that it’s undocumented). If, however, it is largely responsible for the blade of the hockey stick, we can be reasonably certain that the whole thing has been cooked.
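The flat-input experiment proposed here can be sketched in Python without IDL. Several things below are assumptions rather than facts from the file: `timey` is taken to be one value per year over the plot's `xrange=[1881,1994]`, the adjustment is added to the series (as in the commented-out `tsin=yyy+yearlyadj` line), the `filter_cru` smoothing step is left out, and the hand-written `interpol` is only a linear stand-in for the IDL routine that refuses to extrapolate.

```python
def interpol(values, knots, xs):
    """Piecewise-linear interpolation; a stand-in for IDL's interpol().

    Unlike the IDL routine, this version refuses to extrapolate.
    """
    out = []
    for x in xs:
        for i in range(len(knots) - 1):
            lo, hi = knots[i], knots[i + 1]
            if lo <= x <= hi:
                t = (x - lo) / (hi - lo)
                out.append(values[i] + t * (values[i + 1] - values[i]))
                break
        else:
            raise ValueError(f"{x} lies outside the knot range")
    return out


yrloc = [1400.0] + [1904.0 + 5.0 * i for i in range(19)]
valadj = [0.75 * v for v in
          [0., 0., 0., 0., 0., -0.1, -0.25, -0.3, 0., -0.1, 0.3, 0.8, 1.2,
           1.7, 2.5, 2.6, 2.6, 2.6, 2.6, 2.6]]

timey = list(range(1881, 1995))   # assumed yearly axis, from the plot xrange
flat = [0.0] * len(timey)         # the "straight line" input
yearlyadj = interpol(valadj, yrloc, timey)
adjusted = [f + a for f, a in zip(flat, yearlyadj)]

# Spot-check a few years of the adjusted flat series.
for year in (1881, 1934, 1964, 1994):
    print(year, round(adjusted[timey.index(year)], 4))
```

Because the input is all zeros, whatever shape comes out is the adjustment itself, which is the point of the test.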

>Would it be possible for you to rerun this using constant data as an input and graph it?

Yes, actually. I’ll see what I can do with gnuplot. Just plotting valadj against date ought to be interesting. 5 year intervals starting from 1904, I think; that would end the graph in 1999 and the code is dated Sep 98.

Here’s the data. Plot when I can free some time from preparing for GPSD release.

Hm, actually this turned out to be easy. Grab the file, put it in (say) “artifical.plot” and just type plot “artifical.plot” at the gnuplot command line.
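If you would rather generate the data file than download it, a small Python sketch can print the two columns gnuplot expects. This is a reconstruction from the arrays in the post, not the original file: it applies the 0.75 scaling and drops the 1400 anchor point, as UPDATE2 describes. Note that `findgen(19)` yields 19 values, so the last knot is 1994.

```python
# Knot years after the 1400 anchor, and the matching scaled adjustments.
years = [1904 + 5 * i for i in range(19)]
raw = [0., 0., 0., 0., 0., -0.1, -0.25, -0.3, 0., -0.1, 0.3, 0.8, 1.2, 1.7,
       2.5, 2.6, 2.6, 2.6, 2.6, 2.6]
scaled = [0.75 * v for v in raw[1:]]   # raw[0] belongs to the omitted 1400 point

# One "year value" pair per line, which gnuplot reads as x and y columns.
rows = [f"{year} {value:.4f}" for year, value in zip(years, scaled)]
print("\n".join(rows))
```

Redirect the output into “artifical.plot” and the gnuplot command above should work on it unchanged.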

Borepatch:

> It’s clear from a short reading that this has never been QA’ed at all – no design or code reviews, and no testing. It’s a hack, in the worst sense of the word.

> With trillions of dollars riding on it. No wonder they resisted the FOIA request.

That kind of quality is not unusual for code written by scientists with no training in computer science or software engineering. Pretty much the only thing that matters is getting the calculation right (hmm… or not, as the case may be). You don’t get style points for usable/flexible/elegant code when you publish the paper. A lot of the stuff is terrible, even the packages with expensive licenses. I’ve seen a C program that used ‘YES’ and ‘NO’ strings for booleans and strcmp for comparing the values, in time-critical code (the code was written in an academic group in the molecular modeling / drug discovery field).

Pardon my ignorance, but what’s that “*0.75” doing in the valadj definition? Does that imply that that multiplier gets applied to each element in the array?

ESR says: Yes, I think so. The effect would be to decrease the extrema of the graph while preserving its shape.
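In IDL, multiplying an array by a scalar does multiply every element. A short Python sketch of the same operation shows the effect on the extrema; the variable names are mine, and the list comprehension stands in for IDL's array arithmetic.

```python
raw = [0., 0., 0., 0., 0., -0.1, -0.25, -0.3, 0., -0.1, 0.3, 0.8, 1.2, 1.7,
       2.5, 2.6, 2.6, 2.6, 2.6, 2.6]

# Element-wise scaling: every entry is multiplied by the same factor.
scaled = [0.75 * v for v in raw]

print("raw    min/max:", min(raw), max(raw))
print("scaled min/max:", round(min(scaled), 4), round(max(scaled), 4))

# The peak and trough stay at the same positions, so the shape is preserved.
same_peak = raw.index(max(raw)) == scaled.index(max(scaled))
same_trough = raw.index(min(raw)) == scaled.index(min(scaled))
```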

esr… another tip from another blog:

> From the file “pl_decline.pro”: “Now apply a completely artificial adjustment for the decline only where coefficient is positive!)”

More to grep

Having worked in R&D labs for two separate Fortune 500 companies, yes, I totally agree. Chemists and metallurgists and physicists tend to write very ugly code with little or no documentation. Lots of stuff hard-coded, doing moronic stuff like your example with converting numbers to strings in places it makes no sense to do so, etc.

OTOH, I’ve seen bad code in open source programs, too, but usually it’s quick-sketch code that just kinda stuck around and the authors usually know how bad it is and plan on rewriting it — just, you know, later. ;) Many scientist programmers I’ve seen actually think they are good coders. But you try explaining to them that “it works” is insufficient criteria for establishing code quality…

Since the divergence problem has been openly discussed in the literature and at conferences for many, many years, and since it has been and is an area of active research (why do some tree ring sequences show this while others don’t? what growth factor has come into play in the last few decades for those that do show it?), I should think the barrel would be quite cool by now …

I think the “smoking gun” here is that some people have just been exposed to some of the issues for the first time and think they’ve uncovered some big secret, so secret that, well, you know, umm, it’s like in the literature. A secret smoking gun hiding right there in plain, published view – imagine that!

Oh, BTW, the “decline” in this context is another term used to describe the “divergence problem”.

The “blatant data cooking” is to use the actual thermometer data where it’s available, which, of course, shows no decline over those decades …

Nothing “secret” about this at all, as mentioned above it’s been openly discussed for years.

>The “blatant data cooking” is to use the actual thermometer data where it’s available, which, of course, shows no decline over those decades …

Oh? “Apply a VERY ARTIFICAL correction for decline!!”

That’s a misspelling of “artificial”, for those of you slow on the uptake. As in, “unconnected to any fucking data at all”. As in “pulled out of someone’s ass”. You’re arguing against the programmer’s own description, fool!

In fact, I’m quite familiar with the “divergence problem”. If AGW were science rather than a chiliastic religion, it would be treated as evidence that the theory is broken.

Or, perhaps, plotting both shows you the divergence which is umm the heart of the divergence problem.

Like – here are the diverging tree proxy results plotted next to the corrected (with real temperature data) results for these decades in which the two time series diverge.

Do you guys really think you can understand the field of dendro paleoreconstructions of climate by reading one graphing program and some e-mail taken out of context? There have been people spending their careers doing this (though AFAIK not a large number), and a body of published literature. Don’t you think looking at that literature might be something to do before accusing people of outright scientific fraud?

The fudge-factor’s derived from the instrumental record.

I don’t expect the paranoids who are convinced all of climate science is one huge fraud to believe it, but hell, your paranoia ain’t going to change the science.

>The fudge-factor’s derived from the instrumental record.

“VERY ARTIFICAL”

But keep digging. It’ll just bury you deeper.

At best, it might be evidence that building reliable temperature proxies using tree ring data is a hopeless endeavor.

But given that CO2’s role as a GHG has been known to physics for roughly 150 years, it’s hard to see how that’s going to be overturned. Or do you think the divergence problem trumps physics?

OK, engaging ranting lunatics has been humorous for the last half hour or so, but I’m done here.

Have fun with your conspiracy theories and your misreading of science!

I have to say the CRU’s work has had a funny aroma around it for several years now. When I heard a couple years ago that they were refusing to make their data and algorithms available to other scientists, the sneaky, nasty, and obvious thought crossed my mind: “Guess they don’t dare show their work.” And for me, that was the end of their credibility.

>“Guess they don’t dare show their work.” And for me, that was the end of their credibility.

And, of course, they now claim that crucial primary datasets were “accidentally” deleted.

After reading some of the emails about evading FOIA2000 requests…accidentally, my ass.

krygny – Looking at the paths/filenames I’ve seen, I think the speculation I read earlier today is correct.

The data here had been assembled to fulfill a FOIA request.

And then when it was denied, it was probably leaked.

(There’s a non-zero chance that it was a fortuitously-timed bit of hacking, or that a hacker had gained entry some time ago and waited to see… but a leak seems more likely.

Definitely not just a random data-grab by a hacker, but…)

esr: Even beyond the probability of it being a deliberate evasion of the FOIA request… it’s astounding incompetence to let the data get deleted.

I keep my worthless personal data backed up redundantly and offsite… and these guys doing (in theory) real scientific research, with professional funding, with high stakes “for the world” and all of that… can’t keep their primary data sets intact?

I’m not sure what’s worse; the idea that they’re this corrupt, or the idea that they’re that incompetent.

“But given that CO2’s role as a GHG has been known to physics for roughly 150 years…”

To what degree? Is there a saturation point? Are there other effects that have much greater magnitudes?

And what does that fact have to do with the complete lack of scientific ethics among climate researchers? Read the emails — they were gaming peer review, black-listing researchers who didn’t toe the line, destroying data rather than submit it to review…

That’s not science. Anyone who thinks science is a worthy endeavor should be disgusted at the behavior these emails show.

Morgan,

“Chemists and metallurgists and physicists tend to write very ugly code with little or no documentation.”

Can we say being good at math makes it less likely you write readable code? The reason I’m asking is that I’m exactly the opposite extreme. I’ve always avoided math and the hard sciences because I just cannot process highly succinct symbolic expressions like p=(s-c)/s*100 and I feel totally scared. Add a Greek letter to it and I’ll be running home to mom. But write it out properly like profit_percentage=(sales-costs)/sales*100 and I understand it in an instant because I will know what it really means, I no longer need to process it symbolically but can peg it to real-world logic and experience. Which means I suck at math and hard sciences even on a high school level but of course I write excellently readable code, I simply have to or I’d totally lose all clue at the fourth line. So I suspect people on the other end of the scale who don’t have any problems with succinct symbolic expressions which aren’t tied directly to real-world common-sense experience, and therefore have no problems in becoming hard scientists, may be less inclined to write readable code. Does it sound likely?
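Written as a function, the readable version of that formula might look like the Python sketch below. The function name and the sample figures are made up for illustration.

```python
def profit_percentage(sales, costs):
    """Profit as a percentage of sales: the spelled-out form of p=(s-c)/s*100."""
    return (sales - costs) / sales * 100


# Hypothetical figures: 200 in sales against 150 in costs.
print(profit_percentage(sales=200.0, costs=150.0))
```

The arithmetic is identical to the terse form; only the names tell you what is being computed.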

Thank you for looking at this. It is amazing you have time to do this kind of code archaeology, but so much better.

Keep grepping! :-)

‘I think the “smoking gun” here is that some people have just been exposed to some of the issues for the first time and think they’ve uncovered some big secret, so secret that, well, you know, umm, it’s like in the literature. A secret smoking gun hiding right there in plain, published view – imagine that!’

As a wandering archaeologist who off and on encounters tree rings, I think the “smoking gun” is the peculiar idea that tree rings would be particularly sensitive to temperature. The general view outside a tiny community of climatologists who consider the Mediaeval Warm Period paleoclimate – it’s historic and isn’t “paleo” anything really – is that temperature can account AT BEST for far less than half the variability in tree ring density. Worse, the amount varies within the SAME tree. There is no way to reliably convert tree rings to temperature. They are much more sensitive to rainfall but even there not much more than half the variability is accounted for.

“But given that CO2’s role as a GHG has been known to physics for roughly 150 years…”

So? Are you trying to measure CO2, or temperature? If the former, why are you adjusting for thermometer readings? Since when is CO2 measured with a thermometer? I’d have thought that prefix “thermo-” would give you a clue to what it measures.

From a software project perspective – this code/project is utterly appalling.

It’s dailywtf.com stuff.

Now, this might be funny or interesting if it were a small commercial project etc.

But this code – the results it generates – is being used as a major foundation for trillions of $ of new taxes and unprecedented worldwide regulation.

If that’s the case, it better be bloody good stuff. It better have been reviewed. It better be checked, double checked. Documented, explained and tested.

But it’s not.

It’s the worst code/project I’ve seen all 2009. It’s a joke.

Showstopping bugs. No tests. Manual adjustments required before each run. Can’t repeat program results. No structure. No documentation, no source control. No build scripts.

Both skeptics and alarmists feel that climate change is incredibly important. Whatever “side” you think yourself on – you’ll agree it’s important.

Given the importance of this issue – all the code and data needs to be released into a public repository, refined into a project that actually works (i.e. documentation, data and build scripts, anybody?).

This way it can be inspected by more than a few pairs of eyes.

And we can be sure that whatever decisions are made regarding climate change, we make them on solid foundations.

Attn: All conspiracy theorists

The program you are puzzling over was used to produce a nice smooth curve for a nice clean piece of COVER ART.

http://www.uea.ac.uk/mac/comm/media/press/2009/nov/homepagenews/CRUupdate

I release you now back to your silly sport of spurious speculation.

>The program you are puzzling over was used to produce a nice smooth curve for a nice clean piece of COVER ART.

Supposing we accept your premise, it is not even remotely clear how that makes it OK to cook the data. They lied to everyone who saw that graphic.

“but hell, your paranoia ain’t going to change the science.”

Science. Ah yes. That’s that business where you:

Gather data, recording meticulously both the data and how you gathered it.

Analyse the data, explaining how you are analysing it, presenting program sources and so on if used, together with the data.

Draw conclusions.

Publish your work. Peer review filters papers for publication, it doesn’t validate them.

Others reproduce your work (or can’t) and validate your analysis (or not). If they can’t shake it down after trying, it stands, provisionally.

Notice any difference between this and the way that CRU appear to conduct themselves? Of course, there’s a way open to clear this up, and that’s to present the data they’ve no doubt recorded so meticulously, and present the sources of the programs they used to analyse it, once again, all carefully documented with version control etc. Surely, they must be able to do this. After all, this is work informing the IPCC and governments in trillion dollar programs.

Rob Crawford:

Before you step on that train saying that the CRU team ‘blacklisted’ their opposition, it’s worth knowing what that opposition was.

See my post here:

http://data-n-demagogues.blogspot.com/2009/11/peer-review-skepticism.html

In particular, Soon and Baliunas wrote their 2003 paper after having a nine year (Baliunas) and 7 year (Soon) gap in their professional publication histories, and three years after each of them took jobs at Washington DC think tanks.

They did at LEAST as much cherrypicking of dendro proxies as we’re accusing Mann and Briffa of. Hell, they didn’t even run their own data sets and algorithms, it was just a literature review.

Just because their paper holds to your preconception does not mean it should be held to any lower of a standard.

My dog in this fight is getting the science out. That means I need to hold the skeptical side to an even higher standard of rigor to avoid being blinded by things I want to hear.

At best, it might be evidence that building reliable temperature proxies using tree ring data is a hopeless endeavor.

That’s essentially game, set, and match.

The computer models collectively predict a fundamental “flatness” with carbon dioxide being responsible for the vast majority of all recent warming. That is, they predict a hockeystick. This isn’t exciting in the period 1850-2000, we’re basically in a hockeystick’s blade. (The tip is quite unexpectedly blunted, but that’s a separate issue.)

But when the global circulation models are used on pre-industrial conditions, they predict essentially unremitting flatness.

In other words: the models are falsified by the existence of a non-localized Medieval Warm Period that isn’t caused by carbon dioxide somehow. (They never bother explaining the Roman Climate Optimum, but that’s just yet another fatal flaw in the best extant models.)

The key isn’t that the high church of warming has to stop screaming “Unprecedented!” It is that both the Little Ice Age and Medieval Warm Period aren’t properly modeled by the current state-of-the-art approaches. So either they didn’t exist – or the models suck. The tack used since 1998 is to scream “They’re just local phenomena! Tree-reconstructions don’t show that!”

But…

it might be evidence that building reliable temperature proxies using tree ring data is a hopeless endeavor.

Don’t forget that the actual values they’re using for valadj are multiplied by 0.75. So plotting the literal array may give you a false impression (depending on what you’re looking for). Modified data is here

My first thought is that I doubt there’s a direct link between math ability and readable code.

However your last couple of sentences made me think that while I believe the above, remember that “readable code” is somewhat subjective. Someone could write nice readable code in russian or german and it’d mean absolutely nothing to me. Doesn’t mean it’s not (objectively) readable but I just don’t speak the language it’s readable in. More or less the same with you and math.

Having said that, it would be a rare (or practiced… I don’t discount it being a learnable skill) individual that can write good comments while focusing on the math side of the brain. The code itself can be fine (if mathy) but I’d expect any text to be restatements of the math involved with effectively boilerplate text around it. I’d suggest that any heavily math-based code with nice readable comments has probably been cleaned up after the fact.

P.S. the second quote should be attributed to Shenpen not ESR.

my bad.

“Can we say being good at math makes it less likely you write readable code?”

I suck at math but I also suck at writing readable code :(

http://allegationaudit.blogspot.com/

Just a visitor clicking through, but I figured I might as well share a response to a bit of dhogaza’s inanity:

A metaphor: A home is being built. The owner-to-be notices some wood labeled ‘Oak’ and recognizes the wood as actually being the much softer White Pine. He complains to the construction foreman, confronts the contractor, and, having been ignored by those, spreads word of the deception. At this point, a random person attacks the owner-to-be, saying, “Do you really think you can understand the field of domicile structural constitution by looking at one cord of wood?”

So i’m wandering through the various links to climategate posts and I came across this gem from ‘HARRY_READ_ME.txt’.

Is this the data they’ve been using? If so wouldn’t that mean that their error margin is at least 0.5degrees? Which would mean that the error margin is almost the size of the graph in question?

Am i making an incorrect assumption somewhere or do they actually want me to believe that any of their data points could be actually anywhere on that graph?

I wonder if anyone answered Bret’s comment about this actually leading to published work? I know most people’s hard drives have all sorts of crap on them, a bit of code to develop a graph might or might not have made it into something published and even then it only matters if it wasn’t properly documented.

I am sorry, but I have to stick with “tempest in a teapot” for this whole hacked archive.

Perhaps ‘ARTIFICAL’ is meant to imply that it’s not backed by theory, but simply designed to make the tree-ring data correspond with the thermometer data. In that case, the correction (if it actually does that) is quite justified, at least for making those data-sets correspond (and if it’s made obvious that such has been done, rather than used as a device to falsely claim that all sources agree).

All one has to ask here is: what would happen if this were data from a clinical pharmaceutical trial instead of a climate change model?

Doctors paid by the drug company to run the trial won’t release data and their notes state “falsified data that did not agree with hoped-for outcomes. Death rate seems too high so we are ignoring it.”

In any other matter of similar or lesser importance, there would be no defense of the scientists in question. It is only the religious nature of the belief in global warming driving a defence of what is clearly at least questionable science. Add in actual data that conflicts with the models and really, really crappy models (I build them for a living) and it is clear what is going on with the defenders.

@esr:

Nice word! :)

@shenpen:

Well, it’s not necessarily so that the code is unreadable. It’s possible for code to be ugly and still be readable. :) See this article written by an ex-Microsoft employee. I’m sure others around here have other, similar articles. But I’ll bet no one has any that are as funny as this one. (Or maybe I just had a little too much wine with my dinner.) I’ve seen all sorts of stuff like that.

BTW, readability doesn’t have everything to do with variable names. Good variable names help, but they aren’t the only thing.

Excuse me for asking a rather basic question, but what do the valadj numbers mean? How are they applied to the data? I’m assuming they’re not degrees C – that would imply very easy-to-detect fudging.

It’s unfortunate that the project to use the info in the leaked emails to uncook the data and present it to the public openly and honestly seems to be regarded as low priority, compared to focusing on the lies for the AGW skeptics and rationalizing the lies for the AGW believers. Both sides claim to be interested in science over politics but they both seem relatively uninterested in the real data gathered by the CRU.

Thomas Covello- The code creates the “yrloc” array containing the values [1400, 1904, 1909, 1914, …, 1994]. It then creates a second array, “valadj”, that contains values that correspond element-by-element to the elements of the “yrloc” array. The final line interpolates between those entries to find a “yearlyadj” (yearly adjustment) value. For example, anything in or after 1974 gets a “yearlyadj” of 2.6*0.75, due to the string of 2.6 entries. The year 1920 falls between the entries 0.0 (for 1919) and -0.1*0.75 (for 1924), and would get a “yearlyadj” value of -0.015.
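For anyone who wants to check that arithmetic without an IDL licence, here is a minimal Python sketch of the same piecewise-linear interpolation. It is not the CRU code: the helper name yearly_adj is made up for illustration, it assumes interpol is doing plain linear interpolation between the yrloc knots, and it simply clamps outside the knot range rather than extrapolating.

```python
# Knots copied from the snippet: 1400, then 1904..1994 in 5-year steps.
yrloc = [1400.0] + [1904.0 + 5.0 * i for i in range(19)]
# Adjustment values, scaled by the 0.75 "fudge factor" as in the original.
valadj = [0.75 * v for v in [
    0., 0., 0., 0., 0., -0.1, -0.25, -0.3, 0., -0.1,
    0.3, 0.8, 1.2, 1.7, 2.5, 2.6, 2.6, 2.6, 2.6, 2.6]]
assert len(yrloc) == len(valadj)  # same idea as the IDL 'Oooops!' guard


def yearly_adj(year):
    """Linearly interpolate valadj at `year` (hypothetical helper)."""
    # Assumption: clamp outside the knot range instead of extrapolating.
    if year <= yrloc[0]:
        return valadj[0]
    if year >= yrloc[-1]:
        return valadj[-1]
    for i in range(len(yrloc) - 1):
        x0, x1 = yrloc[i], yrloc[i + 1]
        if x0 <= year <= x1:
            frac = (year - x0) / (x1 - x0)
            return valadj[i] + frac * (valadj[i + 1] - valadj[i])


print(round(yearly_adj(1920), 3))  # one fifth of the way from 0.0 to -0.075
print(round(yearly_adj(1974), 3))  # start of the flat 2.6*0.75 plateau
```

The 1920 case lands one fifth of the way between the 1919 and 1924 knots, which is where the -0.015 figure comes from.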

I notice another variable called “Cheat” in cru-code/linux/mod/ghcnrefiter.f90 in subroutine MergeForRatio

!*******************************************************************************

! adjusts the Addit(ional) vector to match the characteristics of the corresponding

! Stand(ard), for ratio-based (not difference-based) data, on the

! assumption that both are gamma-distributed

! the old (x) becomes the new (y) through y=ax**b

! b is calc iteratively, so that the shape parameters match

! then a is deduced, such that the scale parameters match

It seems to get the value of Cheat=ParTot-AddTot or Cheat=(ParTot/En) depending on a couple of things. It then gets added to New thusly:

New=Cheat+(Multi*(Addit(XYear)**Power))

Which then becomes the value of Addit(XYear)=New

Might be nothing, just a shortcut of some sort. But going by what we have seen so far, a variable named Cheat involved in an adjustment of any sort is suspect.

In fact, “New=Cheat+(Multi*(Addit(XYear)**Power))” looks a lot like “the old (x) becomes the new (y) through y=ax**b” mentioned in the subroutine comment except the comment doesn’t mention anything about something being added to it.
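To make the difference concrete, a small Python sketch follows. The numbers are invented and the function names are hypothetical; it only contrasts the documented form y=ax**b with the form the code line appears to use, y=c+ax**b, and says nothing about what values Cheat actually takes in ghcnrefiter.f90.

```python
def documented_transform(x, a, b):
    """y = a * x**b, as described in the subroutine comment."""
    return a * x ** b


def coded_transform(x, a, b, cheat):
    """y = cheat + a * x**b, mirroring New=Cheat+(Multi*(Addit(XYear)**Power))."""
    return cheat + a * x ** b


# Made-up illustrative values, not taken from the CRU data.
x, multi, power, cheat = 4.0, 2.0, 0.5, 0.3
print(documented_transform(x, multi, power))    # 2 * sqrt(4)
print(coded_transform(x, multi, power, cheat))  # same, shifted by cheat
```

Whatever Cheat turns out to be, the two forms differ by exactly that constant for every year, so the comment and the code only agree when Cheat is zero.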

“But given that CO2’s role as a GHG has been known to physics for roughly 150 years…”

Wow, Dhogaza seems to have eaten a bit of humble pie in the last few weeks. Good to see him falling back to safer territory – the hard science of laboratory CO2 physics. The odd thing about this is that the argument was never about CO2, it was about the use/abuse of questionable temperature proxies to manage our expectations of normal global temperature so that the term “unprecedented global warming” can be held over our heads as proof of our misdeeds. But we all know how fallacious this whole AGW debate is.

If I were to bite, and I normally wouldn’t, I’d question how much we really know about how CO2 acts in the atmosphere. And supposing we accepted that an increase in atmospheric CO2 concentration causes a subsequent temperature rise (causation not correlation), my question would be – exactly how much actual warming does this produce? As far as I am aware, that answer based on laboratory CO2 physics is very well known and relatively small (does that make me a luke warmer?). The catastrophic warming projected in the IPCC AR4 depends on the assumption of positive feedbacks in the climate system that dramatically amplify the effects of the extra CO2; something that is even more uncertain than the temperature record – but that doesn’t seem to stop it being put into the climate models. I tend to find that when you know a little about this subject and can refute a lot of the supposed settled science, the arguments for AGW tend to boil down to this: It has to be CO2 because we have accounted for all other known variables. This is a bit rich coming from possibly the most complicated science ever undertaken, whose participants can’t even put together an accurate temperature record.

Also, please find a present my creative other half came up with when she read of the plight of our intrepid coder:

http://www.freeimagehosting.net/uploads/6fa0eea5a0.jpg

> “VERY ARTIFICAL”

Yes, but the problem there is you found this by grepping for nasty sounding terms, so it’s not particularly incriminating if you find a few examples in a huge codebase. It’s not (as is suggested elsewhere) like picking a random board in a new house and noticing the wood is bad. It’s like nitpicking an entire construction project, picking the worst example of work you can find, and claiming the whole house is bad because of it.

So it seems for some cover art they “cheated” by using model data for dates they don’t have real data for, and adjusted it to match the pretty picture of the real data for recent dates. The real data apparently paints a far more severe problem than the model. Yes, it is a sign of some problem in the models, which nobody would claim are particularly accurate anyway. Are we supposed to take comfort in that!?

Read and understand the code before you speak!

#1 The variable is not actually used in the code.

#2 The values in the variable are in the context of tree ring data, not the climate model.

#3 Since the value is not actually used it is not clear what the units are for the correction. But a commented section of the code suggests it may once have been used as an input to a routine called filter_cru which, as far as I can tell, is not present in the leaked data. The assumption that the numbers are degrees is certainly without basis.

#4 This is a draft version of a routine. Later versions don’t even include the unused section. Those more developed and commented routines seem like more obvious candidates for the code that would have been used to generate publication quality output, not some scientists scratch paper.

#5 The legend for the plot(also unused) indicates that the correction’s usage was clearly indicated if a different version of the code actually used the correction.

plot,[0,1],/nodata,xstyle=4,ystyle=4

;legend,[‘Northern Hemisphere April-September instrumental temperature’,$

; ‘Northern Hemisphere MXD’,$

; ‘Northern Hemisphere MXD corrected for decline’],$

; colors=[22,21,20],thick=[3,3,3],margin=0.6,spacing=1.5

Oh, one other point. The file is written in IDL NOT FORTRAN

There is also a bug in *your* code.

valadj=[0.,0.,0.,0.,0.,-0.1,-0.25,-0.3,0.,- 0.1,0.3,0.8,1.2,1.7,2.5,2.6,2.6,$

2.6,2.6,2.6]*0.75

Notice the “*0.75”: that means the vector is multiplied by 3/4. You don’t do this in your output, so your values are incorrect.

Amazing, I had no idea computer programmers were so qualified to make so many claims about climate science. Some computer programmers are also apparently gifted in mysticism as they are able to come to conclusions without any knowledge or context. Peer-reviewed journals are another interesting topic computer programmers would know plenty about. After all, computer science is communicated mostly through conferences instead of peer-reviewed journals.

With so much talk about valid science being published in peer review, I would think people would be happy about the pressure placed upon the journal in question by climate scientists. But of course, some programmers may not have looked deeply enough into their crystal ball to see that the papers in question were faulty and were written for political purposes. Or am I misunderstanding the claims being made here? Perhaps some programmers feel that faulty papers should be published for political purposes in science journals. Perhaps some programmers believe that science journals should be more like the humanities journals, and people should just spend their time arguing how they feel. In fact, why not just allow everyone to publish whatever in a journal, no matter if it is right or wrong?

I’m also amused at how some programmers applied their notions about things they aren’t even qualified to judge. Since some programmers believe the calculations of tree data were wrong or falsified, they have assumed all calculations by all organizations and disciplines from around the world on a variety of different sources (ice, sats, etc) are wrong too. To make matters more interesting, the same programmers had no clue about the purpose or usage of said code.

I’m also curious how everyone seems to believe that climate scientists are hiding all the data. Climate scientists must be conspiring to defraud the public if they are unable to talk commercial weather services into providing their source of revenue to the public for free. Of course, all of these organizations funded by the fossil fuel industry could pay the commercial entities and get their own access to the data but perish the thought. Of course, people could get public data sets and models….

http://tamino.wordpress.com/climate-data-links/

But hidden data ( or should I say commercially owned data) sounds more interesting and important. Since the public data shows all the trends of global warming, perhaps the unavailable data-set from commercial weather services show the smoking gun. EH?

Junk science indeed.

So let me get this straight. The Global Warming Believers (hence referred to as GWB’s, which is ironic since the former US President with the same initials was labeled by the GWB’s as “stupid, moronic, etc.” while GWB wasn’t a confused GWBer) tell us that these are just snarky e-mails that don’t prove anything (suppressing alternate evidence and denouncing those who provide it) AND that the computer code is also explainable (sure, ARTIFICAL refers to last night’s Antiques Roadshow episode…..not some public school idiot who can’t spell, MUCH LESS WRITE COMPUTER CODE).

YOUR god is DEAD!!! Your prophet Gore has been PROVEN a liar by the same data he uses to gain (the other) profits!

This needs to be OPENLY investigated, I suspect that others have manipulated data for greed (federal funding). Strange how all scientists funded by Gov’t. get a pass as being altruistic (Gov’t wanting more taxes and control of your life) while any past affiliation or funding by ANY corporation is suspect. If you’re backed by the PETA, Planned Parenthood, and ACORN ilk you’re a saint. Fill up your pick-up truck at a BP gas station and you’ve become their “mouth piece”.

On a personal note, I feel sorry for these people who just got SMACKED in the face with reality. It’s like when my kids found out that I was Santa Claus. “But, but, but…..how about when…?”. They busted me when they CAUGHT me putting gifts under the Christmas Tree labeled “From: Santa”.

In the end, your seemingly “altruistic” Santa Claus turned out to be a lie. The “Tooth Fairy” and the “Easter Bunny” can’t help you out because they are as real as Santa Claus is (and I know what the definition of “is” is).

GWB’s seem to be taking the Larry Craig “Wide Stance” response. Way funny !

Truthfully, this is like the “palm reader” in my town whose house burned down. It’s strange how she could predict everyone else’s future FOR MONEY and she couldn’t see herself in the hospital or being homeless. After the fact, the Crystal Ball person TOTALLY saw this coming.

The moral of the story is: Don’t believe people who CLAIM to be able to predict the future, computer programs that can’t be reviewed are as accurate as “crystal balls”, and that they think that they “have the FORCE” behind them like obi wan kenobi, “These aren’t the FACTS that we’ve been looking for. Move along, move along”.

As an afterthought, the GWBs are caught in the same scandal as the Catholic Church pedophiles are. Suppressing information and covering up known problems, all for the sake of their “religion”. Wanna bet that if Mann got fired, he’d get huge money to lecture at colleges and tenure at another school??? Relocating.

These people have to play hard and up their game now. Otherwise they’ll be marginalized as the HACKS and LIARS that they are.

We’re supposed to believe that their computer model that can’t predict the past is going to predict the future. Meanwhile, Bill Gates has smarter people than the IPCC and can’t get Vista or Windows 7 to work right.

It’s strange how these people can decry a 1500 year old book (the Bible) as fantasy while claiming a PROVEN fraud computer program can predict the future.

Talk about faith…..and being stupid!

Interesting comment by Mark Sawusch on RealClimate:

http://www.realclimate.org/index.php/archives/2009/11/the-cru-hack-context/comment-page-14/#comment-144828

Includes a quote from a paper: “To overcome these problems, the decline is artificially removed from the calibrated tree-ring density series, for the purpose of making a final calibration. The removal is only temporary, because the final calibration is then applied to the unadjusted data set (i.e., without the decline artificially removed). Though this is rather an ad hoc approach, it does allow us to test the sensitivity of the calibration to time scale, and it also yields a reconstruction whose mean level is much less sensitive to the choice of calibration period”

Sounds like our VERY ARTIFICIAL correction, described openly some time back, along with what it was used for and why. If so, it’s neither hidden nor fraudulent. There may be other problems with this (the commenter mentions some) but the cannon might have cooled down suddenly.

>Would it be possible for you to rerun this using constant data as an input and graph it?

One of the things Ross McKitrick did, already years ago, was just that. Feed noise into the model and see what came out.

Read this:

http://www.uoguelph.ca/~rmckitri/research/McKitrick-hockeystick.pdf

For those that have been following the debate surrounding the hockey stick, the whole “Climategate” scandal does nothing but confirm stuff that was already known.

See http://www.climateaudit.org

Have a look at the last part of the pr_decline.pro file. They are fitting a parabola to the data set, constrained to pass through a point created by averaging the temperatures between 1856 and 1930 and placing it at 1930, and then reducing temperatures that fall above it (and leaving those below it alone). The effect is not only to reduce the 1930s temperatures, but also to add a parabolic effect to the data, making it look like temperature increase is accelerating. If this sort of filter is commonly applied, it’s no wonder that the 1990s show such a steeply rising curve.

Um, you do realize you’re dramatizing over the code to generate a piece of artwork, right?

It’s a POSTER, people, not a “graph deciding how trillions will be spent.”

I applaud the zeal of your inquiry, but shouldn’t you apply it to data that actually matters, rather than dissecting cover art?

It’s just this sort of thing that makes the anti-science movement look…well, kind of dumb.

Just asking.

CBB

That code adjusts tree ring data, NOT temperatures. But still, it is data cooking.

You’re forgetting to mention what it hides a decline in, and how.

The code in question fudges for a divergence between temperature as calculated from tree growth data (which has declined since about 1960), and temperature as actually measured in every other way (which shows warming). Your blog post horribly misrepresents this as some kind of fudge applied to the global temperatures reported as evidence of global warming; it isn’t, and they don’t even use tree growth data over the period in question.

dhogaza Says:

November 25th, 2009 at 3:21 pm

OK, engaging ranting lunatics has been humorous for the last half hour or so, but I’m done here.

Have fun with your conspiracy theories and your misreading of science!

Well, since they won’t release their data, or their methods; it can’t be replicated. Therefore what they are doing is not science.

C.B.B., I think I can answer your question properly.

*ahem*

Yeah, poster art that was used to convince policy makers that we have a CRISIS on our hands, which is now known to be a LIE and a HOAX made by Marxist Communist Socialist AL GORE to institute a Communist Socialist ONE WORLD GOVERNMENT and to TAKE ALL OUR MONEY!!! Why are you excusing a clear example of MASSAGING DATA that will be used to TAKE OVER THE WORLD by Socialist Communist Kenyan Muslim OBAMA and his little crony Socialist Communist Nazi Climate Hoaxer AL GORE!! NOW EXPOSED TO BE A LIE AND A HOAX!!!!!! HOAX AND A LIE!!!!

You know who else used posters to convince people there was a CRISIS?

Hitler.

Socialist Communist AL GORE and these so-called scientist/propogandists are now EXPOSED as being JUST LIKE HITLER!!!! HOAX!!!! LIE!!!! AL GORE!!!!! HITLER!!!! SOCIALIST!!!!!

How come the “skeptics” here so easily swallow everything AGW-critical without any kind of skeptical thinking?

How come you throw quotes and code snippets around and ignore the context?

Read the code after the snippet.

That adjustment array produced wasn’t even applied to any data.

It’s not used anywhere.

Read more:

http://allegationaudit.blogspot.com/2009/11/mining-source-code.html

“It’s just this sort of thing that makes the anti-science movement look…well, kind of dumb.”

The anti-science movement? That would be the folks who delete data rather than share it, corrupt the peer review process, and consider “skeptic” an insult?

>It flattens a period of warm temperatures in the 1940s 1930s — see those negative coefficients?

>Then, later on, it applies a positive multiplier so you get a nice dramatic hockey stick at the end of the century.

If you read the code you’ll see that (a) this is not a ‘multiplier’ it’s additive (it couldn’t be a multiplier otherwise those negative numbers would have resulted in negative maximum temperatures in the 1930s and those zeroes would have meant 0 degrees in the early part of the century) and (b) the yearlyadj part is not actually used in the code.
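A quick Python sketch of point (a), using invented numbers rather than anything from the CRU files: the only place the snippet shows yearlyadj being used is the commented-out call with tsin=yyy+yearlyadj, which is a sum, and treating the same values as multipliers would give nonsense for the zero and negative entries. The variable names below are hypothetical.

```python
# Hypothetical series values for three years; not real data.
series = [0.20, 0.25, 0.30]
# Adjustments of the kind found in valadj*0.75: zero, negative, positive.
adj = [0.0, -0.225, 1.95]

# Additive use, as in the commented-out tsin=yyy+yearlyadj.
added = [s + a for s, a in zip(series, adj)]
# What a "multiplier" reading would do instead.
multiplied = [s * a for s, a in zip(series, adj)]

print(added)       # a zero adjustment leaves the first value unchanged
print(multiplied)  # a zero "multiplier" wipes the first value out entirely
```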

ESR says: I don’t understand this assertion. I can see where it’s used.

http://wattsupwiththat.com has a couple of great articles on this same subject (scroll down)

For some deeper insight into dendro and its problems, it’s definitely worth getting up to speed on Climate Audit.

Incidentally, if the code was open source all along, poor Steve McIntyre and friends would be having a *much* easier time of it.

“This, people, is blatant data-cooking, with no pretense otherwise.”

I don’t think so.

If you look at the code in its entirety, you’ll see that the only line that uses yearlyadj is commented out, and there are no references to anything in this code snippet that aren’t commented out. So they calculate this “VERY ARTIFICAL correction for decline”, but it never actually gets used. Had you shown the very next few lines of code that would have been clear:

yearlyadj=interpol(valadj,yrloc,timey)

;

;filter_cru,5.,/nan,tsin=yyy+yearlyadj,tslow=tslow

;oplot,timey,tslow,thick=5,color=20

;

filter_cru,5.,/nan,tsin=yyy,tslow=tslow

oplot,timey,tslow,thick=5,color=21

Note that the line that uses yearlyadj is commented out – the line that replaces it does not use yearlyadj

That’s quite a trick to get commented out code to cook data….

Just been posting some stuff at Bishop Hill http://bishophill.squarespace.com/blog/2009/11/26/smoking-gun.html

In pl_decline.pro it loads

; Use the calibrate MXD after calibration coefficients estimated for 6

; northern Siberian boxes that had insufficient temperature data.

print,’Reading MXD and temperature data’

it does a whole load of stuff then it does something odd

Pre 1930 (or 1900 when running the program in regression mode) it calculates a single decline value: the average of everything before 1930.

Post 1930 and up to 1994 it fits the data to a formula = 1930value + (1/7200) * (year – 1930)^2 which is a parabola.

Finally it seems to remove the 1930 value offset (everything pre 1930 = 0 ?) and post 1930 is a curve starting from 0.

Then it saves the values – the original 1930 value to a file implying they are corrections (this could just be lazy programmer bad variable naming)

save,filename=’calibmxd3’+fnadd+’.idlsave’,$

g,mxdyear,mxdnyr,fdcalib,mxdfd2,fdcorrect

Plot this in Excel if you want to see the curve the data is fitted to. Not sure where the data goes next but it’s a very odd thing to do.

year f

1930 0.252306

1931 0.252444889

1932 0.252861556

1933 0.253556

1934 0.254528222

1935 0.255778222

1936 0.257306

1937 0.259111556

1938 0.261194889

1939 0.263556

1940 0.266194889

1941 0.269111556

1942 0.272306

1943 0.275778222

1944 0.279528222

1945 0.283556

1946 0.287861556

1947 0.292444889

1948 0.297306

1949 0.302444889

1950 0.307861556

1951 0.313556

1952 0.319528222

1953 0.325778222

1954 0.332306

1955 0.339111556

1956 0.346194889

1957 0.353556

1958 0.361194889

1959 0.369111556

1960 0.377306

1961 0.385778222

1962 0.394528222

1963 0.403556

1964 0.412861556

1965 0.422444889

1966 0.432306

1967 0.442444889

1968 0.452861556

1969 0.463556

1970 0.474528222

1971 0.485778222

1972 0.497306

1973 0.509111556

1974 0.521194889

1975 0.533556

1976 0.546194889

1977 0.559111556

1978 0.572306

1979 0.585778222

1980 0.599528222

1981 0.613556

1982 0.627861556

1983 0.642444889

1984 0.657306

1985 0.672444889

1986 0.687861556

1987 0.703556

1988 0.719528222

1989 0.735778222

1990 0.752306

1991 0.769111556

1992 0.786194889

1993 0.803556

1994 0.821194889

pre 1930, judging by this comment in the code everything is set to the 1930 value

;*** MUST ALTER FUNCT_DECLINE.PRO TO MATCH THE COORDINATES OF THE

; START OF THE DECLINE *** ALTER THIS EVERY TIME YOU CHANGE ANYTHING ***

;
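The table above can be regenerated from the formula 1930value + (1/7200) * (year - 1930)^2 with a few lines of Python. This is only a check of the arithmetic, not a port of pl_decline.pro: the constant 0.252306 is simply the 1930 entry from the table, the function name is hypothetical, and the flat pre-1930 branch is an assumption based on the reading of the code comment.

```python
BASE_1930 = 0.252306  # the 1930 value from the table above


def decline_curve(year):
    """Parabola starting from the 1930 value; assumed flat before 1930."""
    if year <= 1930:
        return BASE_1930
    return BASE_1930 + (year - 1930) ** 2 / 7200.0


for year in (1930, 1931, 1960, 1994):
    print(year, round(decline_curve(year), 9))
```

By 1994 the squared term contributes 64^2/7200, a little under 0.57, which is more than twice the 1930 starting value.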

Besides the comment from Patrick on November 26th, 2009 at 12:57 am, this was answered here: http://www.realclimate.org/index.php/archives/2009/11/the-cru-hack-context/comment-page-15/#comment-144890

Now, instead of jumping to conclusions you could have asked Gavin at Realclimate.org, using this advice: “Try to find an answer by asking a skilled friend.” He’s been very responsive and if framed properly (“Hasty-sounding questions get hasty answers, or none at all. The more you do to demonstrate that having put thought and effort into solving your problem before seeking help, the more likely you are to actually get help.”) he would have gladly answered, although “Never assume you are entitled to an answer. You are not; you aren’t, after all, paying for the service. You will earn an answer, if you earn it, by asking a substantial, interesting, and thought-provoking question — one that implicitly contributes to the experience of the community rather than merely passively demanding knowledge from others.”

Oh yeah, “Don’t rush to claim that you have found a bug.”

>Now, instead of jumping to conclusions you could have asked Gavin at Realclimate.org,

realclimate.org is a wholly-owned subsidiary of the “hockey team”. One of the leaked emails offers it for use as a propaganda arm.

Correct. As the code is now, the line that uses yearlyadj is, in fact, commented out. But the fact that the code is in there but commented out shows that the code was likely used to generate at least some graphs.

Given the prevalence of filter_cru in the programs (it appears to be called in 191 different files) it might be reasonable to assume that it does some sort of standard data massaging to the various data sets. Since, as you say later, we don’t know what this function provides, we don’t necessarily know that the yearlyadj variable is not used against the climate model.

Perhaps, but we don’t really know what was used to generate the publication output, do we? We can only guess.

That’s a disingenuous statement at best. Just looking at the code, we don’t know what lines were commented or uncommented when the routines were run, or why the routines were run, or which particular runs were used to generate the particular graphs.

What we do know is that, at some point at least, there were “VERY ARTIFICAL” corrections used to “hide the decline.”

What is “the decline” and why did they need to hide it?

I suspect the adjustment code was commented out because the underlying data had been “adjusted” so the graph itself no longer needed to adjust for it. The fact that the entire series of data since 1904 was overlaid with these adjustments is pretty telling. The MXD reconstructions since 1960 from tree-ring data are what was supposed to be in question. Why completely replace data since 1904 instead of starting in 1960?
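To see which years the adjustment actually touches, here is a rough Python port of the snippet quoted in the post. The `yrloc` and `valadj` values are copied from that snippet (reading the stray "- 0.1" as -0.1). The time axis `timey` is a placeholder of mine, since the real one is not shown, and `np.interp` holds the end values constant outside the knots, which may not match what IDL's `interpol` does there.

```python
import numpy as np

# Knots copied from the IDL snippet quoted in the post (yrloc, valadj).
yrloc = np.concatenate(([1400.0], np.arange(19) * 5.0 + 1904.0))
valadj = np.array([0.0, 0.0, 0.0, 0.0, 0.0, -0.1, -0.25, -0.3, 0.0, -0.1,
                   0.3, 0.8, 1.2, 1.7, 2.5, 2.6, 2.6, 2.6, 2.6, 2.6]) * 0.75

# Same sanity check as the IDL "Oooops!" guard.
assert yrloc.size == valadj.size

# ASSUMPTION: a yearly time axis; the real 'timey' is not shown in the post.
timey = np.arange(1400, 1995)

# Linear interpolation between knots, like interpol(valadj, yrloc, timey).
yearlyadj = np.interp(timey, yrloc, valadj)

# Year of the first knot whose adjustment is not zero.
first_nonzero_knot = int(yrloc[np.nonzero(valadj)[0][0]])
print("first non-zero knot:", first_nonzero_knot)

# Adjustment at a handful of years, for eyeballing.
for year in (1904, 1924, 1934, 1960, 1994):
    print(year, round(float(yearlyadj[timey == year][0]), 4))
```

This only shows what the array would do if the commented-out line were enabled. It cannot tell us whether that ever happened.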

“Besides the comment from Patrick on November 26th, 2009 at 12:57 am, this was answered here:”

Oh, in that case we can all move on. Thanks for clearing it all up.

We need to draw a line under this and progress.

A good way of drawing the line would be to have an audit of CRU, their data management procedures, their code development procedures, QA, archiving, all that boring stuff. I’m sure they’ll come up with a clean bill of health, after all, it must be stuff they’ve been doing as routine. It’ll cost a few million but it will do far more to silence the doubters than hand waving about how this was used to produce some poster artwork.

The question is why there was ever a need to fit a data set to a computed (i.e. manufactured) hockey-stick trend with hardcoded values: pre 1930 OK, post 1930 softly increasing.

Whether it was for a poster, an IPCC report or an internal PowerPoint presentation, questions have to be asked. They may have a very boring and reasonable answer, but they do have to be asked.

>realclimate.org is a wholly-owned subsidiary of the “hockey team”. One of the leaked emails offers it for use as a propaganda arm.

Instead of addressing RealClimate’s argument, you are attacking the blog itself. This is, of course, a well known fallacy called “ad hominem.”

E.L. writes “Amazing, I had no idea computer programmers were so qualified to make so many claims about climate science.”

The same can also be said in reverse, “Amazing, I had no idea climate analysts were so qualified to make so many claims about computer science”. Or more specifically, write code that seems difficult, if not impossible, to verify when its use is for something as important as climate change.

I am a software engineer and I make no pretense about knowing much about climate. In reviewing original Fortran code within the cru-code directories (these are Fortran, not IDL) I did find one programming defect in one set of code that I examined. However, each and every time I have mentioned the specifics of such things (which I’m omitting here) in a blog comment I have specifically noted that this (or other issues I have raised) may or may not be a problem. For example, the defect I found only occurs if the data exceeds a certain range (the range is tested but then the following code is incorrect and does not fix the problem). Without the original data, it is entirely possible that this “error correction fix” is never executed, and thus, never has any impact on the output.

It is fair to ask questions about issues that seem odd. But that does not necessarily mean the code or the model is wrong – in much of the code I have looked at, there are essentially zero comments. For example, one Fortran source file (of which there are dozens) has over 1,000 lines of source code and 6 comment lines at the beginning explaining only how to compile the file with the other source file. Without a specification or a test plan, we have no way of knowing what is considered the “correct answer” for the output. (One definition of “quality” is it “conforms to the specification” – which unfortunately leaves open the problem of what is quality if the spec is wrong … but that is another story.)

There is much that I have viewed in the code that ranges from defective, to poor programming practices, to data adjustments that seem odd – but even in the case of the defect, I cannot say that it has caused a problem (code may never have been executed, as above example explains).

There are other issues – was there ever a spec for the code? Why did they implement their own DBMS in many thousands of lines of Fortran (see cru-code directory) versus using an off the shelf, tested and considered reliable DBMS? What was their test plan? Test scenarios? Test scripts?

These are reasonable questions to ask.

Would I fly in an airplane that was built based upon aerodynamic model simulations having this evident s/w engineering quality? No.

Climate change is a serious subject. However, some of the code I have seen (again, I’ve focused on the cru-code directory) gives the impression that the climate analysts did not take their software development as seriously as a serious subject should be treated. They owe it to us to treat the code as seriously as they treat the climate issues – and it is not clear that they have taken their s/w quality seriously. (Read the HARRY_README for further concerns on that topic.)

But I am trying to be fair and not second guessing the intent of the code (I’m not playing climate analyst) and I am not drawing conclusions – except for the one that the overall impression of SQA is not good. But I also expect that climate analysts will at least respect that there are reasons that modern s/w development has advanced beyond hacking.

To summarize – it’s fair to examine the code and ask pertinent questions. I discourage jumping to conclusions – and that applies to both those who say “there’s nothing to see here” and those who are crying “fraud”.

re the various comments saying that this project was to generate a graphic for a piece of artwork.

According to Gavin at RealClimate.org:

“HARRY_read_me.txt. This is a 4 year-long work log of Ian (Harry) Harris who was working to upgrade the documentation, metadata and databases associated with the legacy CRU TS 2.1 product, which is not the same as the HadCRUT data (see Mitchell and Jones, 2003 for details). The CSU TS 3.0 is available now (via ClimateExplorer for instance), and so presumably the database problems got fixed. Anyone who has ever worked on constructing a database from dozens of individual, sometimes contradictory and inconsistently formatted datasets will share his evident frustration with how tedious that can be.”

No institution would assign someone to a project lasting 4 years for a piece of artwork.

I can certainly understand the tendency of people to minimize what may be potentially damaging information, but try at least to do it in a credible and not so easily disproved way.

Here’s the link to Gavin’s post:

http://www.realclimate.org/index.php/archives/2009/11/the-cru-hack-context/

>realclimate.org is a wholly-owned subsidiary of the “hockey team”. One of the leaked emails offers it for use as a propaganda arm.

Cop-out. You didn’t even try to get more information. Apparently you didn’t even read further down to find out whether the ARTIFICAL correction had been used in this iteration. Was this procedure used in the final product? Apparently not.

So if this was just part of a work in progress (labeled boldly and conveniently “ARTIFICAL correction” maybe so nobody accidentally used it? I can speculate as well) and never saw the light of day, why is it such a big deal?

BTW, when I submit a manuscript for publication, do I need to submit all the drafts, showing my various errors, as well?

I think everyone misses the point about the ARTIFICAL CORRECTION.

The takeaway is this: If 40 years of tree ring data diverges from the last 100 years of instrumental records, how can you have ANY confidence in tree-ring data as a proxy for temperature?

How do you know the entire tree-ring record isn’t full of such divergences giving you spurious and inaccurate reconstructed temperature in the period prior to the instrumental record?

No it isn’t. You could at least _try_ to read the whole code you’re referencing.

You can tell there’s more Sociology going-on than Science.

Wow. Just… wow. As Eric said in “Ego is for little people”, he’s “unusually capable” of admitting and correcting his own errors. I’m sure we’ll see a correction or retraction here any minute.

>I’m sure we’ll see a correction or retraction here any minute.

As others have repeatedly pointed out, that code was written to be used for some kind of presentation that was false. The fact that the deceptive parts are commented out now does not change that at all.

It might get them off the hook if we knew, for certain, that it had never been shown to anyone who didn’t know beforehand how the data was cooked and why. But since these people have conveniently lost or destroyed primary datasets and evaded FOIA requests, they don’t deserve the benefit of that doubt. We already know there’s a pattern of evasion and probable cause for criminal conspiracy charges from their own words.

Were the instruments as sensitive in the 1930’s as they were in later decades? I would imagine not.

I think it would be necessary to apply a correction to the older data to bring it in line with current accuracy levels. I am not claiming they got the correction right (I think that would be impossible because we simply did not have sensitive instruments then), but it seems as if it would be more dishonest to display the data without correction, no? If anything, should we not be looking closely at where they derived their correction algorithm, instead of jumping to conclusions about motives?

..Ch:W..

For what? Commented-out code is not being used now, but the fact it was in there strongly suggests it was used at some point. Even if it wasn’t used in the final product, there was a stage at which someone thought “fudge” was required. We need to know about that. What other algorithms were tried and found wanting? When data-culling was done, what criteria determined that certain samples were unreliable? Was it nothing more than that those samples didn’t confirm the hypothesis? We need to know that, too.

The problem with the AGW folks is that they haven’t shown us the raw data and the methods they used to produce their Hockey Sticks. The emails uncovered recently indicate that their response to FoIA requests was to delete them rather than reveal them. If you don’t show your observed data, and all the algorithms (including computer code) used to produce your charts and graphs, so that other researchers can repeat your work and confirm it, you are not doing science.

To all the various folks defending CRU and realclimate.org:

There’s one very simple way to end all of the arguing – release the raw base data and the methodologies applied to make it useful. Oh, you can’t do that because the data were “accidentally” deleted.

Neither CRU nor GISS has shown any willingness to allow open review of any of their models – neither the math nor the code. Both have fought tooth and nail to prevent the release of any data or methods.

And now, it seems, CRU has actively destroyed data and conspired to delete legally-protected communications rather than turn them over to “hostiles” for analysis.